Selecting texture resolution using a task-specific visibility metric

1 MPI Informatik, 2 University College London, 3 Università della Svizzera italiana, 4 University of Cambridge

Abstract

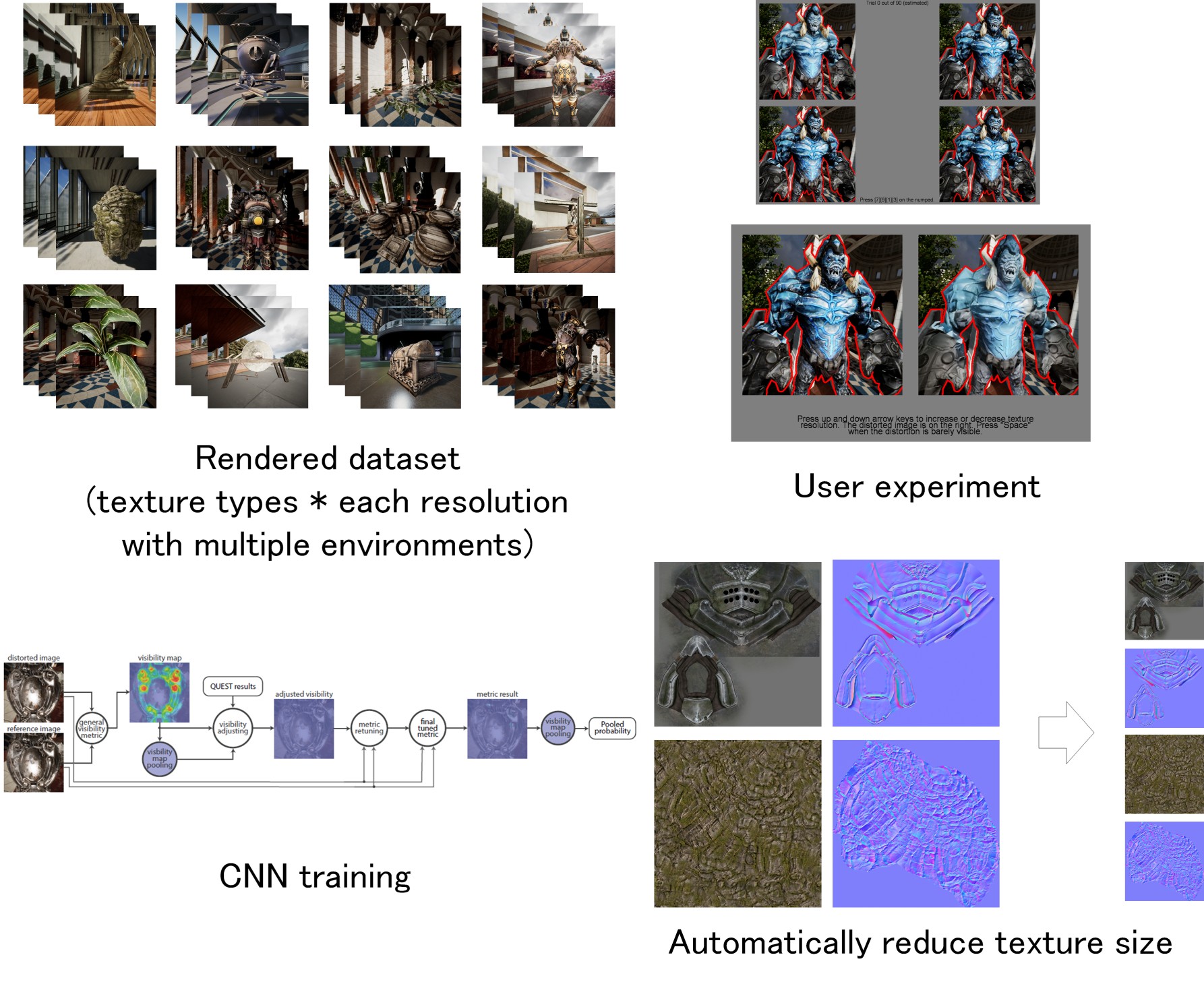

In real-time rendering, the appearance of scenes is greatly affected by the quality and resolution of the textures used for image synthesis. At the same time, the size of textures determines the performance and the memory requirements of rendering. As a result, finding the optimal texture resolution is critical, but it is also a non-trivial task, since the visibility of texture imperfections depends on the underlying geometry, illumination, interactions between several texture maps, and viewing positions. Ideally, we would like to automate the task with a visibility metric that could predict the optimal texture resolution. Such a metric performs best when it is trained for the given task. This, however, requires sufficient user data, which is often difficult to obtain. To address this problem, we develop a procedure for training an image visibility metric for a specific task while reducing the effort required to collect new data. The procedure involves generating a large dataset using an existing visibility metric and then refining that dataset with the help of an efficient perceptual experiment. The refined dataset is then used to retune the metric. This way, we expand sparse perceptual data into a large number of per-pixel annotated visibility maps, which serve as the training data for application-specific visibility metrics. While our approach is general and can potentially be applied to other image distortions, we demonstrate an application in a game engine where we optimize the resolution of various textures, such as albedo and normal maps.
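As an illustration of how a visibility metric could drive the choice of texture resolution, the following is a minimal sketch, not the method from the paper. It assumes a hypothetical callable `max_visibility(res)` that renders the scene with a texture downsampled to `res`, compares the result against the full-resolution reference using a visibility metric, and returns the highest predicted detection probability over all pixels and viewpoints. The function name, the threshold value, and the toy stand-in metric are all assumptions made for the example.

```python
from typing import Callable, Sequence


def select_resolution(
    resolutions: Sequence[int],
    max_visibility: Callable[[int], float],
    threshold: float = 0.5,
) -> int:
    """Return the smallest candidate resolution whose predicted
    distortion visibility does not exceed `threshold`.

    `max_visibility` is a hypothetical wrapper around a visibility
    metric; it maps a texture resolution to a detection probability
    in [0, 1]. If no candidate satisfies the threshold, the largest
    resolution is returned as a fallback.
    """
    for res in sorted(resolutions):
        if max_visibility(res) <= threshold:
            return res
    return max(resolutions)


def toy_metric(res: int) -> float:
    """Toy stand-in for a real metric (assumption for this example):
    visibility falls off as the texture resolution increases."""
    return min(1.0, 64.0 / res)


candidates = [64, 128, 256, 512, 1024]
chosen = select_resolution(candidates, toy_metric, threshold=0.2)
print(chosen)
```

In practice the toy metric would be replaced by a trained, task-specific metric, and the threshold would be chosen according to how much visible degradation is acceptable in the application.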

Materials

- Paper (PDF, 3 MB)

- Supplemental material (PDF, 24 MB)

- Dataset (Images, 2 GB)

- CNN metric code (git repository)

Acknowledgements

The project was supported by the Fraunhofer and Max Planck cooperation program within the German pact for research and innovation (PFI). This project has also received funding from the European Union's Horizon 2020 research and innovation programme under the Marie Skłodowska-Curie grant agreements No 642841 (DISTRO) and No 765911 (RealVision).